FireFly: A Synthetic Dataset for Ember Detection in Wildfire

Introduction & Background

This work was done in collaboration with Yue Hu, under the guidance of Prof. Peter Beerel at the EESSC Group.

The paper was presented as a poster at the Artificial Intelligence for Humanitarian Assistance and Disaster Response Workshop (AI + HADR).

I joined the team as a Directed Research student in Fall 2022, given my experience building apps and simulations in Unity and UE4, and continued working on the project until my graduation in May 2023. My contributions to the project were:

- Constructed Unreal Engine environments and graphics pipelines to generate photorealistic synthetic geospatial data

- Programmed simulations and wrote data processing pipelines to handle diverse datasets by varying scene parameters

- Wrote scripts for training and evaluating object detection models (such as YOLO and Sparse R-CNN) using transfer learning

- Explored generation of infrared views and aerial views to track fires

While the main goal was to use this data to combat wildfires, the proposed system could also be extended to edge inference on UAVs and autonomous vehicles.

Details

As the description above suggests, our main focus was to build a pipeline that generates synthetic data from a game engine for ML models.

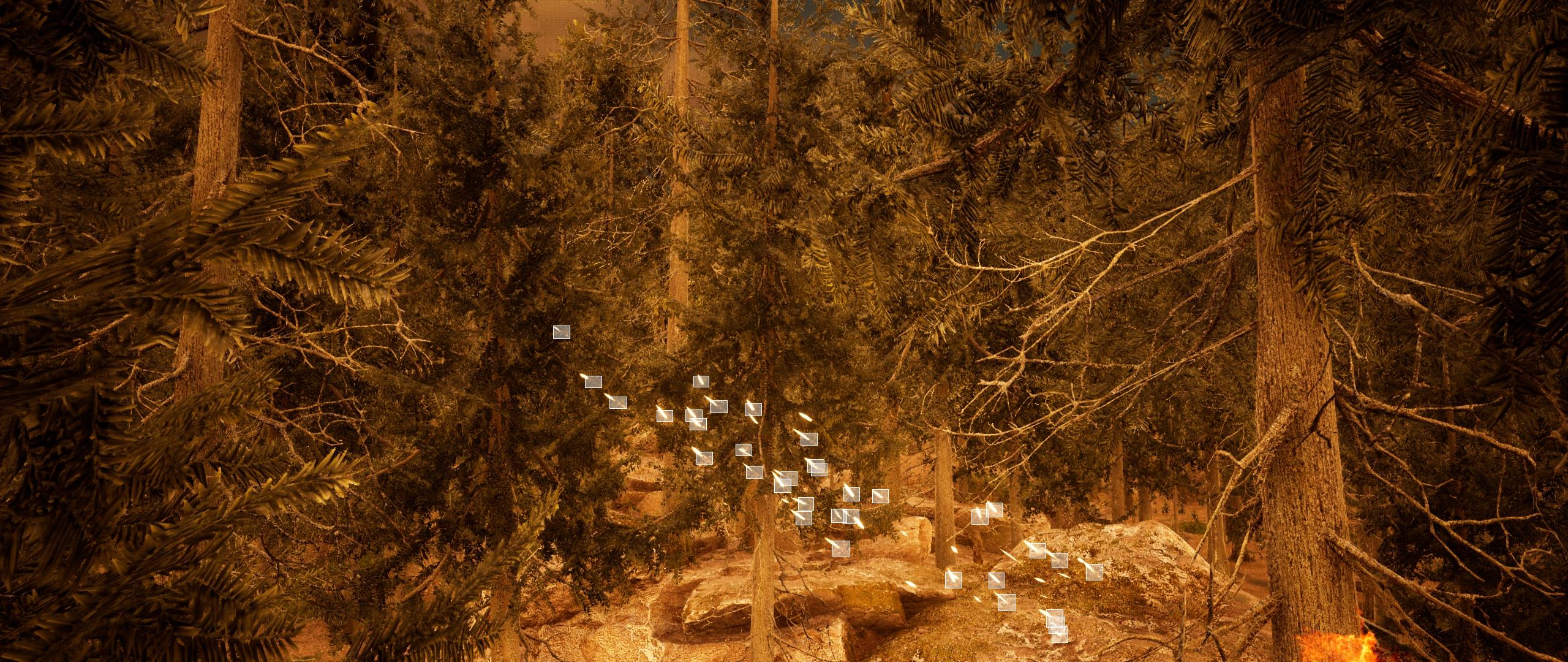

For this, we start by building a UE scene with a forest (given our target use case, wildfires) and set up a particle system using Niagara to mimic a fire burning with embers.

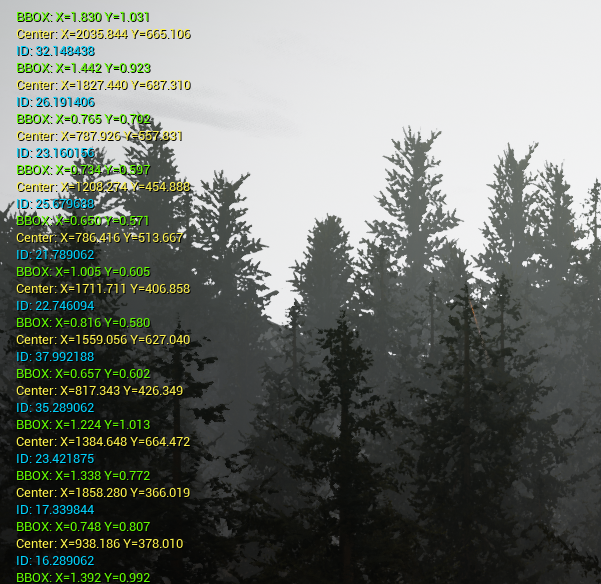

We then capture real-time data to track each ember across frames using its world-space coordinates. This data looks something like this:

After processing, we end up with frame-level data like this:

Frame: 2

CLASS: Embers

ID: 0.0

Center: X=1251.366 Y=690.728

BBOX: X=1.615 Y=1.183

CLASS: FIRE

ID: 0.0

Center: X=2193.368 Y=1239.878

BBOX: X=4274.819 Y=3975.728
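The frame-level records above can be read back into structured objects with a small parser. The following is a minimal sketch, not the project's actual code: the `Detection` class and `parse_frames` function are hypothetical names, and it assumes that the `BBOX` X and Y values are the box width and height and that each record ends with its `BBOX` line.

```python
import re
from dataclasses import dataclass


@dataclass
class Detection:
    frame: int
    label: str      # lower-cased class name, e.g. "embers" or "fire"
    track_id: int   # per-object ID used to follow it across frames
    cx: float       # box center, X
    cy: float       # box center, Y
    w: float        # assumed: BBOX X is the box width
    h: float        # assumed: BBOX Y is the box height


_XY = re.compile(r"X=([-\d.]+)\s+Y=([-\d.]+)")


def _xy(value):
    """Extract the two floats from a string like 'X=1.5 Y=2.5'."""
    match = _XY.search(value)
    if match is None:
        raise ValueError(f"expected 'X=... Y=...', got {value!r}")
    return float(match.group(1)), float(match.group(2))


def parse_frames(text):
    """Parse frame-level text into a list of Detection objects."""
    detections = []
    frame = None
    current = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue  # blank lines between fields are ignored
        key, _, value = line.partition(":")
        key, value = key.strip().lower(), value.strip()
        if key == "frame":
            frame = int(value)
        elif key == "class":
            current = {"label": value.lower()}  # a new record starts here
        elif key == "id":
            current["track_id"] = int(float(value))
        elif key == "center":
            current["cx"], current["cy"] = _xy(value)
        elif key == "bbox":
            w, h = _xy(value)
            detections.append(Detection(frame=frame, w=w, h=h, **current))
    return detections


SAMPLE = """
Frame: 2
CLASS: Embers
ID: 0.0
Center: X=1251.366 Y=690.728
BBOX: X=1.615 Y=1.183
CLASS: FIRE
ID: 0.0
Center: X=2193.368 Y=1239.878
BBOX:X=4274.819 Y=3975.728
"""

for det in parse_frames(SAMPLE):
    print(det)
```

Splitting each line on the first colon keeps the parser tolerant of the missing space in `BBOX:X=...`, and lower-casing the class name lets `Embers` and `FIRE` be handled uniformly.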

This data is used to generate ember bounding boxes for training, and we apply masks to check that the boxes are accurate. Below is a sample screen:

This is then converted into a COCO-format JSON file with the following fields:

{

"info": [...],

"images": [...],

"categories": [...],

"annotations": [...]

}

In these:

- `images` contains a list of all images in the data, with details like height, width, date captured and file name.

- `categories` has a list of categories. We set 1 for type fire, and 2 for type embers.

- `annotations` is a list of annotations (bounding boxes) for each image and each ember/fire within that image. We also assign IDs to each ember to track them across multiple frames.
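As an illustration of the conversion, here is a minimal sketch that builds a COCO-style dictionary from center/size records. It is not the project's converter: the `to_coco` function, the `frame_000002.png` file-name pattern, the image size passed in, and the extra `track_id` field are all assumptions made for the example. Only the category IDs (1 for fire, 2 for embers) come from the description above. `info` is written as a small dictionary, as in the standard COCO layout, and `date_captured` is omitted.

```python
import json

# Category IDs as described in the text: 1 = fire, 2 = embers.
CATEGORY_IDS = {"fire": 1, "embers": 2}


def to_coco(detections, width, height):
    """Build a COCO-style dict from records with center/size boxes.

    Each detection is a dict with keys:
    frame, label, track_id, cx, cy, w, h.
    """
    frames = sorted({d["frame"] for d in detections})
    images = [
        {
            "id": frame,
            "file_name": f"frame_{frame:06d}.png",  # hypothetical naming scheme
            "width": width,
            "height": height,
        }
        for frame in frames
    ]

    annotations = []
    for ann_id, d in enumerate(detections, start=1):
        # COCO boxes are [x_min, y_min, width, height], so shift from the center.
        x_min = d["cx"] - d["w"] / 2
        y_min = d["cy"] - d["h"] / 2
        annotations.append(
            {
                "id": ann_id,
                "image_id": d["frame"],
                "category_id": CATEGORY_IDS[d["label"].lower()],
                "bbox": [x_min, y_min, d["w"], d["h"]],
                "area": d["w"] * d["h"],
                "iscrowd": 0,
                "track_id": d["track_id"],  # non-standard field (assumption)
            }
        )

    return {
        "info": {"description": "synthetic ember/fire frames (example)"},
        "images": images,
        "categories": [
            {"id": cat_id, "name": name} for name, cat_id in CATEGORY_IDS.items()
        ],
        "annotations": annotations,
    }


example = [
    {"frame": 2, "label": "Embers", "track_id": 0,
     "cx": 10.0, "cy": 20.0, "w": 4.0, "h": 6.0},
]
print(json.dumps(to_coco(example, width=1920, height=1080), indent=2))
```

Keeping the per-ember ID in its own field leaves the standard COCO keys untouched, so tools that only read `bbox` and `category_id` would still load the file.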

This JSON is then fed to our ImageNet-based models, such as YOLO and Sparse R-CNN, for training. We validated the trained models by evaluating them on a real-world dataset.
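The post does not state which metric was used for the real-world check. A common building block for comparing predicted and ground-truth boxes is intersection over union (IoU), sketched below for COCO-style `[x_min, y_min, width, height]` boxes. The `iou_xywh` function is a hypothetical helper for illustration, not part of the project's evaluation code.

```python
def iou_xywh(box_a, box_b):
    """Intersection over union of two [x_min, y_min, width, height] boxes."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b

    # Overlap along each axis, clamped at zero when the boxes do not touch.
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    intersection = inter_w * inter_h

    union = aw * ah + bw * bh - intersection
    return intersection / union if union > 0 else 0.0


# Two 2x2 boxes offset by one unit overlap in a 1x1 square: IoU = 1 / 7.
print(iou_xywh([0, 0, 2, 2], [1, 1, 2, 2]))
```

A prediction is typically counted as correct when its IoU with a ground-truth box exceeds a chosen threshold. For very small objects such as embers, a shift of a pixel or two changes IoU sharply, so the threshold matters more than it does for large objects.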

Other Experiments

Given our goal of extending the system to drones, we built a scene to capture aerial views:

We also explored using infrared views to see if we could use thermal sensing to better track the embers.

Citation

Hu, Yue, Xinan Ye, Yifei Liu, Souvik Kundu, Gourav Datta, Srikar Mutnuri, Namo Asavisanu, Nora Ayanian, Konstantinos Psounis, and Peter Beerel. “FireFly: A Synthetic Dataset for Ember Detection in Wildfire.” In Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 3765-3769. 2023.
